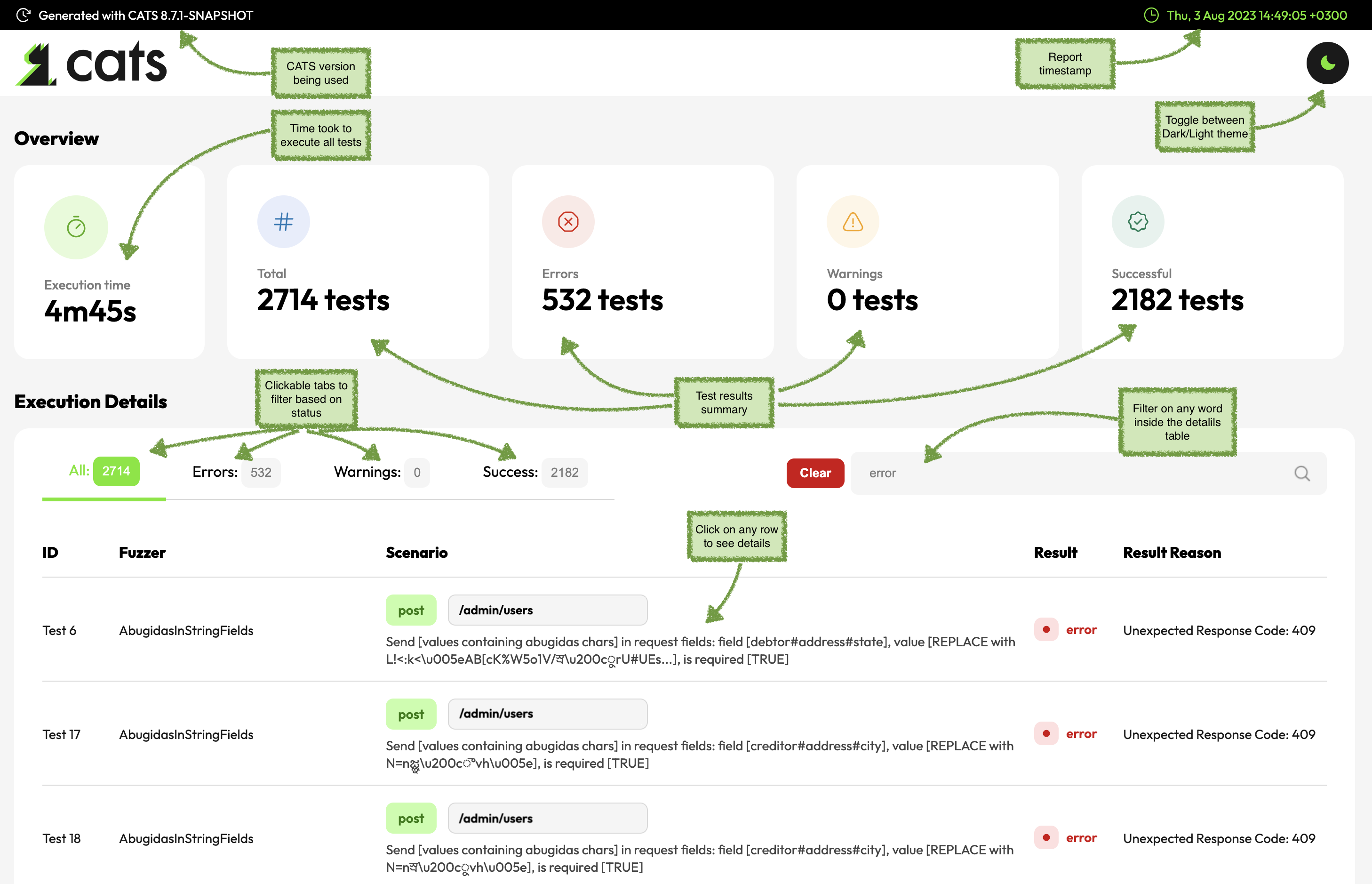

Interpreting Results

This is a typical CATS report summary page:

HTML_JS

HTML_JS is the default report format produced by CATS. It uses JavaScript to make the report interactive.

The execution report is written to a folder called `cats-report`, which is created inside the current folder.

By opening the `cats-report/index.html` file, you can:

- filter tests based on the result: `All`, `Success`, `Warn` and `Error`
- use the omni-search box to search for any string within the summary table
- see a summary of all tests, with the path each test was run against and its result
- click on any test to get details about the scenario being executed, the expected result and the actual result, as well as the request/response details

You can change the `cats-report` folder to something else using the `-o` argument. For example, `cats ... -o /tmp/reports` writes the CATS report to the `/tmp/reports` folder.

Please note that JavaScript might not work well with some CI servers. When embedding the report into a CI pipeline, you may prefer the non-JS version.

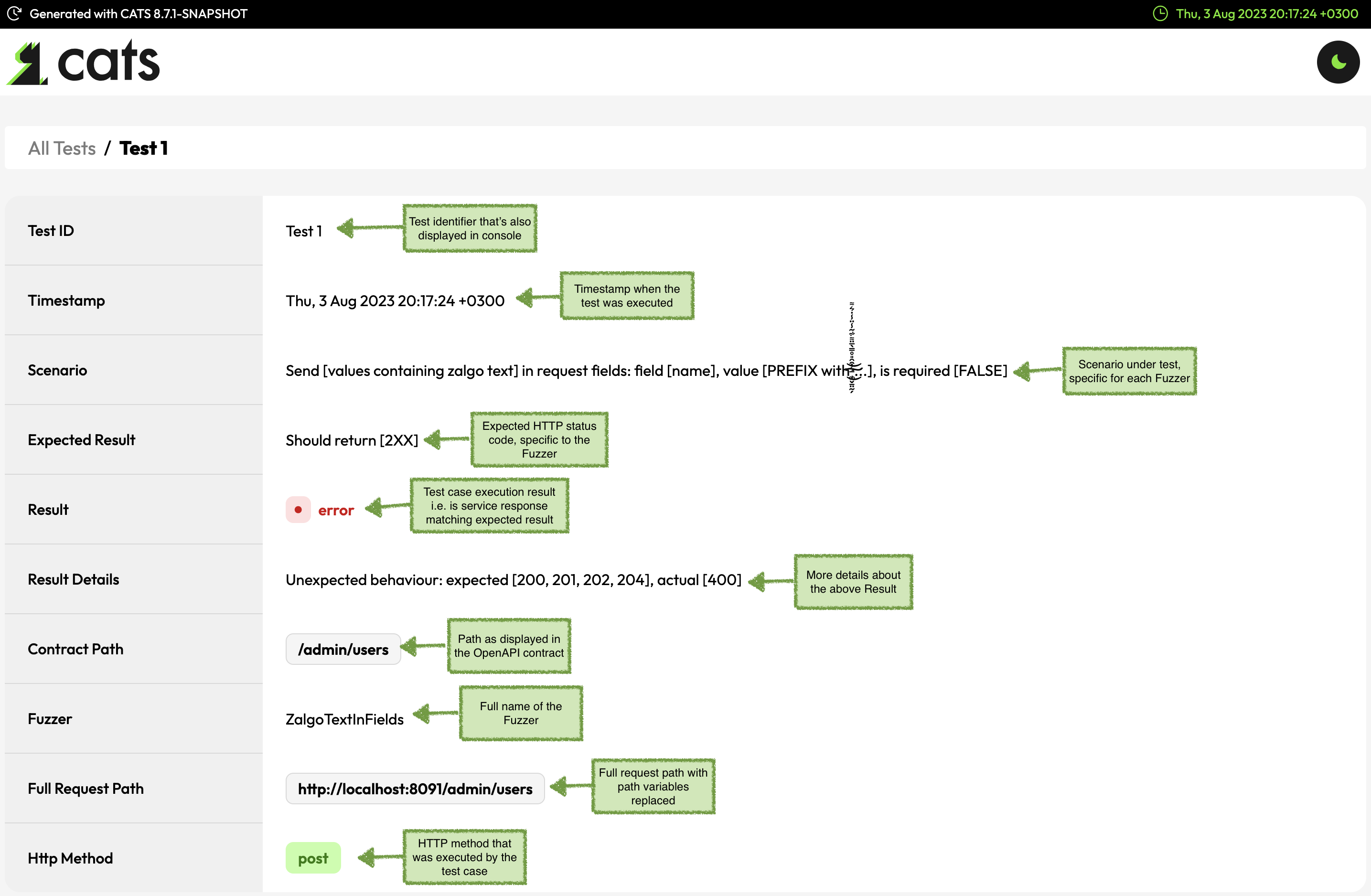

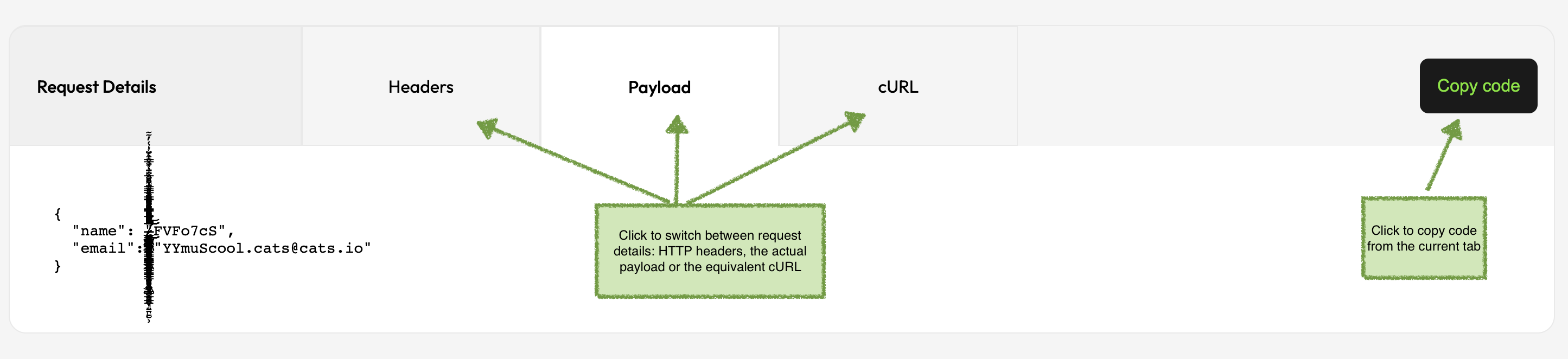

Along with the summary in `index.html`, each individual test has its own `TestXXX.html` page with more details.

Individual tests are also written as JSON files. This is useful when you want to replay a test using `cats replay TestXXX`.

Tests can also be uploaded as test evidence and later reproduced or verified.
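When many tests need to be replayed, the command lines can be generated from the report folder instead of typed by hand. The Python sketch below assumes that the individual JSON files are named `Test<N>.json` and sit directly inside `cats-report`; it only builds `cats replay TestXXX` command lines and does not run them. The helper names `replay_commands` and `_test_number` are hypothetical.

```python
from pathlib import Path
from typing import List


def _test_number(path: Path) -> int:
    """Extract the numeric part of a name like 'Test12' so Test2 sorts before Test10."""
    digits = "".join(ch for ch in path.stem if ch.isdigit())
    return int(digits) if digits else -1


def replay_commands(report_dir: str = "cats-report") -> List[List[str]]:
    """Build one `cats replay <TestName>` command line per individual JSON test file.

    Assumption: individual tests are stored as Test<N>.json files directly inside
    the report folder; adjust the glob pattern if your layout differs.
    """
    root = Path(report_dir)
    if not root.is_dir():
        return []  # no report folder yet, nothing to replay
    test_files = sorted(root.glob("Test*.json"), key=lambda p: (_test_number(p), p.name))
    # p.stem drops the ".json" extension, e.g. "Test12"
    return [["cats", "replay", p.stem] for p in test_files]
```

Each returned list can be passed to `subprocess.run` to execute the replay; sorting by the numeric part keeps the replay order close to the original run order.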

Understanding the Result Reason values:

- `Unexpected Exception` - reported as `error`; this indicates a possible bug in the service or a corner case that is not handled correctly by CATS
- `Not Matching Response Schema` - reported as a `warn`; this indicates that the service returns an expected response code and a response body, but the response body does not match the schema defined in the contract
- `Undocumented Response Code` - reported as a `warn`; this indicates that the service returns an expected response code, but the response code is not documented in the contract
- `Unexpected Response Code` - reported as an `error`; this indicates a possible bug in the service: the response code is documented, but is not expected for this scenario
- `Unexpected Behaviour` - reported as an `error`; this indicates a possible bug in the service: the response code is neither documented nor expected for this scenario
- `Not Found` - reported as an `error` in order to force providing more context; this indicates that CATS needs additional business context in order to run successfully, which you can provide using the `--refData` and/or `--urlParams` arguments
- `Response time exceeds max` - reported as an `error` if `--maxResponseTimeInMs` is supplied and the response time exceeds this value
- `Response content type not matching the contract` - reported as `warn` if the content type received in the response does not match the one defined in the contract for the received HTTP response code
- `Error details leak` - reported as `error` if the response body contains sensitive information
- `Not Implemented` - reported as `warn` if the response code is `501`
- `Mass Assignment vulnerability detected` - reported as `error` if the service allows mass assignment
- `Server error with injection payload` - reported as `error` if the service is vulnerable to injection attacks
- `SSRF payload reflected in response` - reported as `error` if the service is vulnerable to SSRF attacks
- `Cloud metadata service accessed` - reported as `error` if the service leaks cloud metadata
- `File content exposed via SSRF` - reported as `error` if the service leaks file content via SSRF
- `Sensitive data leak` - reported as `error` if the service leaks sensitive data
- `Network error reveals SSRF attempt` - reported as `error` if the service is vulnerable to SSRF attacks
- `DNS resolution error reveals SSRF attempt` - reported as `error` if the service is vulnerable to SSRF attacks
- `HTTP client error reveals SSRF attempt` - reported as `error` if the service is vulnerable to SSRF attacks
- `Internal target reflected in response` - reported as `error` if the service is vulnerable to SSRF attacks
- `Missing recommended security headers` - reported as `error` if the HTTP response does not have the recommended security headers
- `Missing response headers` - reported as `error` if the HTTP response does not contain all the headers defined in the contract
- `Potential IDOR vulnerability detected` - reported as `error` if the service is vulnerable to IDOR attacks
- `Server error with IDOR payload` - reported as `error` if the service is vulnerable to IDOR attacks

This is what you get when you click on a specific test:

HTML_ONLY

This format is similar to HTML_JS, but without filtering or sorting. It is better suited for embedding the CATS report into a CI pipeline.

JUNIT

CATS also supports JUNIT output. The output contains one `testsuite` per fuzzer, and each test run by that fuzzer is recorded as a `testcase`.

As the JUNIT format does not have the concept of a warning, the following mapping is used:

- CATS `error` with valid HTTP codes is mapped as JUNIT `failure`
- CATS `error` with code `9XX` is mapped as JUNIT `error`
- CATS `warn` is reported as JUNIT `skipped`, with a message starting with `WARN -`

The JUNIT report is written as `junit.xml` in the `cats-report` folder. Individual test files, in both `.html` and `.json` format, are also created.
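Because warnings travel as `skipped` entries, a CI step that reads `junit.xml` directly has to reverse the mapping above to tell warnings apart from other skips. Below is a minimal Python sketch using only the standard library. It assumes the conventional JUnit layout (`testcase` elements with an optional `failure`, `error` or `skipped` child, and no child for passing tests) and checks for the `WARN -` prefix in either the `message` attribute or the element text, since which of the two CATS fills is an assumption here. `summarize_junit` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def summarize_junit(path: str = "cats-report/junit.xml") -> Counter:
    """Count CATS outcomes in a JUnit report by reversing the CATS-to-JUnit mapping.

    Assumption: a standard JUnit layout where each <testcase> has at most one
    <failure>, <error> or <skipped> child and passing tests have none.
    """
    root = ET.parse(path).getroot()
    counts: Counter = Counter()
    # iter() walks the whole tree, so it works whether the root is <testsuites> or <testsuite>
    for case in root.iter("testcase"):
        if case.find("failure") is not None:
            counts["error"] += 1  # CATS error with a valid HTTP code
        elif case.find("error") is not None:
            counts["error_9xx"] += 1  # CATS error with a 9XX code
        elif (skipped := case.find("skipped")) is not None:
            # The WARN prefix may be in the message attribute or in the element text
            message = (skipped.get("message") or skipped.text or "").strip()
            counts["warn" if message.startswith("WARN -") else "skipped"] += 1
        else:
            counts["success"] += 1
    return counts
```

A CI job could, for example, fail the build when `counts["error"] + counts["error_9xx"]` is greater than zero and only print the number of warnings.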

If you want to keep a history of CATS runs, you can use the `--timestampReports` argument. This creates a sub-folder for each run within the `cats-report` folder, named with the corresponding timestamp.
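When `--timestampReports` is used, scripts often need the most recent run. The exact timestamp format of the sub-folder names is not described here, so this Python sketch avoids parsing names and instead picks the sub-folder with the newest modification time. `latest_run_folder` is a hypothetical helper, and relying on modification times is a simplifying assumption (copying or restoring folders can change them).

```python
from pathlib import Path
from typing import Optional


def latest_run_folder(report_dir: str = "cats-report") -> Optional[Path]:
    """Return the most recently modified run sub-folder, or None if there is none.

    Assumption: every run created with --timestampReports is a direct sub-folder
    of report_dir. Folder names are not parsed; only modification times are used.
    """
    root = Path(report_dir)
    if not root.is_dir():
        return None
    runs = [p for p in root.iterdir() if p.is_dir()]
    # max() with default=None handles a report folder that has no run sub-folders
    return max(runs, key=lambda p: p.stat().st_mtime, default=None)
```

If the timestamp format in the folder names turns out to sort chronologically, sorting by name would be a simpler and more robust alternative.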